Generative Adversarial Networks

Generative Adversarial Networks (GANs)

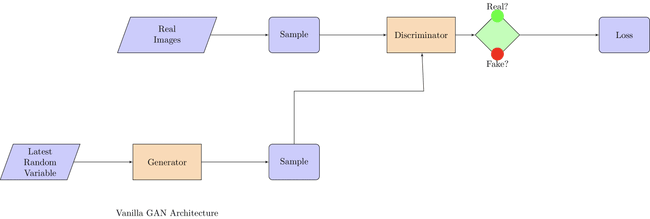

Generative Adversarial Networks (GANs) are a class of machine learning algorithms used in unsupervised learning, implemented as a system of two neural networks that compete with each other in a game-theoretic setting. The method was introduced by Ian Goodfellow and his colleagues in 2014. GANs are used to generate new data that resembles a given set of training data. They are called generative because they produce new data instances, and adversarial because the two networks are trained against each other: one tries to fool the other, and the other tries not to be fooled.

GAN Components

- Generator (G): learns to generate plausible data. Its input is a noise vector $z$ drawn from a predefined noise distribution (e.g., a Gaussian distribution). This randomness is what allows the Generator to produce a variety of outputs.

- Discriminator (D): learns to distinguish real data instances from fake ones produced by the Generator. Its job is to estimate the probability that a given input is real.
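
To make the two roles concrete, here is a minimal sketch for one-dimensional data, assuming a hypothetical linear Generator $G(z) = a z + b$ and a hypothetical logistic Discriminator $D(x) = \sigma(w x + c)$. Real GANs use deep neural networks for both; the function names and the parameters `a`, `b`, `w`, `c` are illustrative only.

```python
import math


def generator(z: float, a: float, b: float) -> float:
    """Hypothetical toy Generator: maps a scalar noise sample z to a data point."""
    return a * z + b


def sigmoid(s: float) -> float:
    """Numerically stable logistic function, mapping any real s to a probability."""
    if s >= 0:
        return 1.0 / (1.0 + math.exp(-s))
    e = math.exp(s)
    return e / (1.0 + e)


def discriminator(x: float, w: float, c: float) -> float:
    """Hypothetical toy Discriminator: estimated probability that x is real."""
    return sigmoid(w * x + c)


fake = generator(0.5, a=2.0, b=1.0)          # 2.0
p_real = discriminator(fake, w=0.5, c=-1.0)  # sigmoid(0.0) = 0.5
```

Here the Discriminator outputs 0.5 for the generated point, meaning it cannot tell whether that point is real or fake.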

The Role of $z$

- $z$: the random noise vector input to the Generator. It is sampled from a probability distribution (commonly a Gaussian distribution) and is the source of randomness that allows the Generator to produce diverse outputs. The dimensionality of $z$ is an important hyperparameter: it influences the Generator's capacity to produce varied data.
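
A minimal sketch of sampling $z$, assuming a standard Gaussian and a hypothetical `latent_dim` parameter for its dimensionality. The toy `linear_generator` is likewise hypothetical; it only illustrates that different noise vectors map to different outputs, while a fixed seed reproduces the same batch.

```python
import random
from typing import List


def sample_noise(batch_size: int, latent_dim: int, seed: int = 0) -> List[List[float]]:
    """Draw batch_size noise vectors z, each with latent_dim entries from N(0, 1)."""
    rng = random.Random(seed)  # local generator, so results are reproducible
    return [[rng.gauss(0.0, 1.0) for _ in range(latent_dim)] for _ in range(batch_size)]


def linear_generator(z: List[float], weights: List[float], bias: float) -> float:
    """Hypothetical toy Generator: weighted sum of the entries of z plus a bias."""
    return sum(w_i * z_i for w_i, z_i in zip(weights, z)) + bias


latent_dim = 8  # hyperparameter: dimensionality of z
zs = sample_noise(batch_size=4, latent_dim=latent_dim, seed=42)
weights = [0.1] * latent_dim
outputs = [linear_generator(z, weights, bias=0.0) for z in zs]
```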

Loss Functions

The core idea behind training GANs is to use a game-theoretic approach where the Generator and the Discriminator have competing objectives. The loss functions capture these objectives.
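
For reference, the original 2014 formulation by Goodfellow and colleagues expresses these competing objectives as a single minimax game over a value function $V(D, G)$; here $p_{\text{data}}$ denotes the real-data distribution and $p_z$ the noise distribution:

$$\min_G \max_D V(D, G) = \mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big] + \mathbb{E}_{z \sim p_z}\big[\log\big(1 - D(G(z))\big)\big]$$

The Discriminator tries to maximize $V$ and the Generator tries to minimize it. Averaged over a batch, the Discriminator's BCE loss is the negative of a sample estimate of $V$, so minimizing that loss maximizes $V$. For the Generator, the original paper suggests in practice maximizing $\log D(G(z))$ instead of minimizing $\log(1 - D(G(z)))$, because this gives stronger gradients early in training.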

Discriminator's Loss Function

The Discriminator's goal is to correctly classify real data as real and fake data (generated by the Generator) as fake. Its loss function is typically the Binary Cross-Entropy (BCE) loss, which for a single sample (averaged over the batch in practice) can be written as:

$$L_D = -\Big[\, y \log D(x) + (1 - y) \log\big(1 - D(G(z))\big) \Big]$$

where:

- $x$ represents real data instances.

- $G(z)$ represents fake data generated by the Generator from noise $z$.

- $y$ is the label indicating real (1) or fake (0) data.

- $D(x)$ is the Discriminator's estimate of the probability that $x$ is real.

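In practice, this loss is split into two parts:

- Real loss: for real data, where $y = 1$, the loss reduces to $-\log D(x)$, which is small when $D(x)$ is close to 1.

- Fake loss: for fake data generated by the Generator, where $y = 0$, the loss reduces to $-\log\big(1 - D(G(z))\big)$, which is small when $D(G(z))$ is close to 0.

The Discriminator's total loss is the sum of these two parts.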
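
A minimal sketch of the two-part Discriminator loss, assuming the Discriminator's outputs for a batch are already available as plain lists of probabilities (`d_real` for $D(x)$ and `d_fake` for $D(G(z))$; both names are hypothetical). Probabilities are clamped away from 0 and 1 so the logarithm stays finite.

```python
import math
from typing import Sequence


def bce(p: float, y: int, eps: float = 1e-12) -> float:
    """Binary cross-entropy for one predicted probability p and label y in {0, 1}."""
    p = min(max(p, eps), 1.0 - eps)  # clamp so log() never sees 0
    return -(y * math.log(p) + (1 - y) * math.log(1.0 - p))


def discriminator_loss(d_real: Sequence[float], d_fake: Sequence[float]) -> float:
    """Batch-averaged real loss (label 1) plus fake loss (label 0)."""
    real_loss = sum(bce(p, 1) for p in d_real) / len(d_real)  # mean of -log D(x)
    fake_loss = sum(bce(p, 0) for p in d_fake) / len(d_fake)  # mean of -log(1 - D(G(z)))
    return real_loss + fake_loss


uncertain = discriminator_loss([0.5, 0.5], [0.5, 0.5])
confident = discriminator_loss([0.99, 0.95], [0.01, 0.05])
```

An uncertain Discriminator that outputs 0.5 everywhere scores $2\log 2 \approx 1.386$, while a confident, correct one scores much lower.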

Generator's Loss Function

The Generator's goal is to produce data that the Discriminator will mistakenly classify as real. Its loss can also use the Binary Cross-Entropy (BCE) form, with generated samples given the "real" label ($y = 1$) so that the loss rewards fooling the Discriminator:

$$L_G = -\log D(G(z))$$

Here, the Generator tries to maximize the likelihood of the Discriminator being wrong, meaning it wants $D(G(z))$ to be as close to 1 as possible, which indicates that the Discriminator believes the fake data is real.
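
A minimal sketch of the Generator loss, again assuming a hypothetical list input (`d_fake` holds $D(G(z))$ for each generated sample). It is the same BCE with the label set to 1, so the loss shrinks as $D(G(z))$ approaches 1.

```python
import math
from typing import Sequence


def generator_loss(d_fake: Sequence[float], eps: float = 1e-12) -> float:
    """Mean of -log D(G(z)): BCE with generated samples labelled as real (y = 1)."""
    return sum(-math.log(max(p, eps)) for p in d_fake) / len(d_fake)


# The better the Generator fools D (higher D(G(z))), the lower its loss.
losses = [generator_loss([p]) for p in (0.1, 0.5, 0.9)]
```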

Training Dynamics

- Update Discriminator (D): Train $D$ to maximize its ability to differentiate real data from fake. This involves optimizing $D$ to recognize real data as real and generated data as fake, i.e., minimizing the Discriminator's loss.

- Update Generator (G): Train $G$ to minimize the Generator's loss by generating data that $D$ will classify as real. This step backpropagates gradients through $D$ to update $G$'s parameters, making $G(z)$ more realistic.
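
Written out with plain gradient descent and a learning rate $\eta$ (an assumption; the text does not name an optimizer), one round of the two updates is, with $\theta_D$ and $\theta_G$ denoting the parameters of $D$ and $G$, and $L_D$ and $L_G$ the Discriminator's and Generator's losses:

$$\theta_D \leftarrow \theta_D - \eta\,\nabla_{\theta_D} L_D, \qquad \theta_G \leftarrow \theta_G - \eta\,\nabla_{\theta_G} L_G$$

With $L_G = -\frac{1}{m}\sum_{i=1}^{m}\log D(G(z_i))$ over a batch of $m$ noise samples, the chain rule shows how the Generator's gradient passes through $D$:

$$\nabla_{\theta_G} L_G = -\frac{1}{m}\sum_{i=1}^{m} \frac{1}{D(G(z_i))}\, \frac{\partial D(x)}{\partial x}\bigg|_{x = G(z_i)}\, \frac{\partial G(z_i)}{\partial \theta_G}$$

Only $\theta_G$ changes in this step; $D$ is held fixed but supplies the gradient signal.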

Iterative Optimization

- Training alternates between optimizing $D$ and $G$, gradually improving the quality of the generated data and the accuracy of $D$'s classifications.

- The Generator starts from random noise (via $z$) and learns to map it to the data space so that its outputs are indistinguishable from real data to the Discriminator.

- The Discriminator improves its ability to distinguish real from fake, forcing the Generator to produce increasingly realistic data.
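
The alternating procedure can be sketched end to end on a deliberately tiny problem. Everything here is an illustrative assumption rather than a standard recipe: one-dimensional real data drawn from $\mathcal{N}(4, 1)$, a hypothetical linear Generator $G(z) = a z + b$, a logistic Discriminator $D(x) = \sigma(w x + c)$ with $\sigma$ the logistic sigmoid, hand-derived gradients, and plain gradient descent. The helpers `d_loss` and `g_loss` are included only for monitoring. A linear Discriminator can only compare means, and plain alternating gradient descent is not guaranteed to settle, so treat this as a demonstration of the update order, not of a well-trained GAN.

```python
import math
import random
from typing import List, Tuple


def sigmoid(s: float) -> float:
    """Numerically stable logistic function."""
    if s >= 0:
        return 1.0 / (1.0 + math.exp(-s))
    e = math.exp(s)
    return e / (1.0 + e)


def softplus(t: float) -> float:
    """Stable log(1 + e^t); note -log sigmoid(s) == softplus(-s)."""
    return max(t, 0.0) + math.log1p(math.exp(-abs(t)))


def d_loss(w: float, c: float, xs_real: List[float], xs_fake: List[float]) -> float:
    """Mean of -log D(x) over real data plus mean of -log(1 - D(G(z))) over fakes."""
    real = sum(softplus(-(w * x + c)) for x in xs_real) / len(xs_real)
    fake = sum(softplus(w * x + c) for x in xs_fake) / len(xs_fake)
    return real + fake


def g_loss(a: float, b: float, w: float, c: float, zs: List[float]) -> float:
    """Mean of -log D(G(z)) with G(z) = a*z + b."""
    return sum(softplus(-(w * (a * z + b) + c)) for z in zs) / len(zs)


def d_step(w: float, c: float, xs_real: List[float], xs_fake: List[float],
           lr: float) -> Tuple[float, float]:
    """One gradient-descent step on the Discriminator loss; the Generator is fixed."""
    gw = gc = 0.0
    for x in xs_real:  # d/ds of -log sigmoid(s) is sigmoid(s) - 1
        g = (sigmoid(w * x + c) - 1.0) / len(xs_real)
        gw, gc = gw + g * x, gc + g
    for x in xs_fake:  # d/ds of -log(1 - sigmoid(s)) is sigmoid(s)
        g = sigmoid(w * x + c) / len(xs_fake)
        gw, gc = gw + g * x, gc + g
    return w - lr * gw, c - lr * gc


def g_step(a: float, b: float, w: float, c: float, zs: List[float],
           lr: float) -> Tuple[float, float]:
    """One gradient-descent step on the Generator loss; gradients flow back through D."""
    ga = gb = 0.0
    for z in zs:
        # dL_G/dG(z) = (sigmoid(s) - 1) * w, with s = w * G(z) + c
        g = (sigmoid(w * (a * z + b) + c) - 1.0) * w / len(zs)
        ga, gb = ga + g * z, gb + g  # chain rule: dG/da = z, dG/db = 1
    return a - lr * ga, b - lr * gb


def train(steps: int = 500, batch: int = 64, lr: float = 0.05,
          real_mean: float = 4.0, seed: int = 0):
    """Alternate one D update and one G update per step; return params and b history."""
    rng = random.Random(seed)
    a, b = 1.0, 0.0  # Generator parameters
    w, c = 0.1, 0.0  # Discriminator parameters
    b_history = []
    for _ in range(steps):
        xs_real = [rng.gauss(real_mean, 1.0) for _ in range(batch)]
        zs = [rng.gauss(0.0, 1.0) for _ in range(batch)]
        xs_fake = [a * z + b for z in zs]
        w, c = d_step(w, c, xs_real, xs_fake, lr)  # 1) improve D with G fixed
        a, b = g_step(a, b, w, c, zs, lr)          # 2) improve G using the updated D
        b_history.append(b)
    return (a, b, w, c), b_history


params, b_history = train()
print("a, b, w, c =", params)
```

Tracking `b_history` shows how the Generator's offset moves in response to the Discriminator over the alternating steps.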
